Database partitioning guidance

general guidance about when to partition data and best practices.

Comparison of database sharding and partitioning

Database sharding is like horizontal partitioning. Both processes split the database into multiple groups of unique rows. Partitioning stores all data groups in the same computer, but database sharding spreads them across different computers.

Why partition data?

- Improve scalability. When you scale up a single database system, it will eventually reach a physical hardware limit. If you divide data across multiple partitions, each hosted on a separate server, you can scale out the system almost indefinitely.

- Improve performance. Data access operations on each partition take place over a smaller volume of data. Correctly done, partitioning can make your system more efficient. Operations that affect more than one partition can run in parallel.

- Improve security. In some cases, you can separate sensitive and nonsensitive data into different partitions and apply different security controls to the sensitive data.

- Provide operational flexibility. Partitioning offers many opportunities for fine-tuning operations, maximizing administrative efficiency, and minimizing cost. For example, you can define different strategies for management, monitoring, backup and restore, and other administrative tasks based on the importance of the data in each partition.

- Match the data store to the pattern of use. Partitioning allows each partition to be deployed on a different type of data store, based on cost and the built-in features that data store offers. For example, large binary data can be stored in blob storage, while more structured data can be held in a document database.

- Improve availability. Separating data across multiple servers avoids a single point of failure. If one instance fails, only the data in that partition is unavailable. Operations on other partitions can continue. For managed PaaS data stores, this consideration is less relevant, because these services are designed with built-in redundancy.

Database partitioning methods

Partitioning methods:

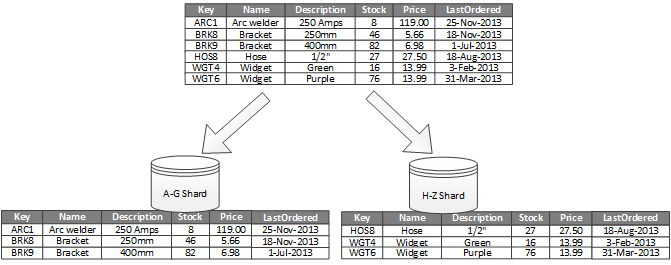

- Horizontal partitioning (often called sharding). In this strategy, each partition is a separate data store, but all partitions have the same schema. Each partition is known as a shard and holds a specific subset of the data, such as all the orders for a specific set of customers.

- Vertical partitioning. In this strategy, each partition holds a subset of the fields for items in the data store. The fields are divided according to their pattern of use. For example, frequently accessed fields might be placed in one vertical partition and less frequently accessed fields in another.

Horizontal partitioning (sharding)

The most important factor is the choice of a sharding key. It can be difficult to change the key after the system is in operation. The key must ensure that data is partitioned to spread the workload as evenly as possible across the shards.

The shards don’t have to be the same size. It’s more important to balance the number of requests. Some shards might be very large, but each item has a low number of access operations. Other shards might be smaller, but each item is accessed much more frequently. It’s also important to ensure that a single shard does not exceed the scale limits (in terms of capacity and processing resources) of the data store.

Avoid creating “hot” partitions that can affect performance and availability. For example, using the first letter of a customer’s name causes an unbalanced distribution, because some letters are more common. Instead, use a hash of a customer identifier to distribute data more evenly across partitions.

Choose a sharding key that minimizes any future requirements to split large shards, coalesce small shards into larger partitions, or change the schema. These operations can be very time consuming, and might require taking one or more shards offline while they are performed.

If shards are replicated, it might be possible to keep some of the replicas online while others are split, merged, or reconfigured. However, the system might need to limit the operations that can be performed during the reconfiguration. For example, the data in the replicas might be marked as read-only to prevent data inconsistences.

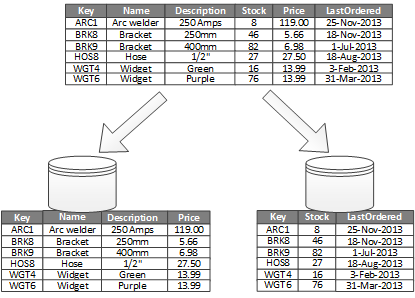

Vertical partitioning

The most common use for vertical partitioning is to reduce the I/O and performance costs associated with fetching items that are frequently accessed.

In this example, different properties of an item are stored in different partitions. One partition holds data that is accessed more frequently, including product name, description, and price. Another partition holds inventory data: the stock count and last-ordered date.

Other advantages of vertical partitioning:

- Relatively slow-moving data (product name, description, and price) can be separated from the more dynamic data (stock level and last ordered date). Slow moving data is a good candidate for an application to cache in memory.

- Sensitive data can be stored in a separate partition with additional security controls.

- Vertical partitioning can reduce the amount of concurrent access that’s needed.

Vertical partitioning operates at the entity level within a data store, partially normalizing an entity to break it down from a wide item to a set of narrow items. It is ideally suited for column-oriented data stores such as HBase and Cassandra.

Database Sharding

Database sharding does not create copies of the same information. Instead, it splits one database into multiple parts and stores them on different computers. Unlike replication, database sharding does not result in high availability. Sharding can be used in combination with replication to achieve both scale and high availability.

Partitioning is more a generic term for dividing data across tables or databases. Sharding is one specific type of partitioning, part of what is called horizontal partitioning.

Designing partitions for scalability

Follow these steps when designing partitions for scalability:

- Analyze the application to understand the data access patterns, such as the size of the result set returned by each query, the frequency of access, the inherent latency, and the server-side compute processing requirements. In many cases, a few major entities will demand most of the processing resources.

- Use this analysis to determine the current and future scalability targets, such as data size and workload. Then distribute the data across the partitions to meet the scalability target. For horizontal partitioning, choosing the right shard key is important to make sure distribution is even. For more information, see the sharding pattern.

- Make sure each partition has enough resources to handle the scalability requirements, in terms of data size and throughput. Depending on the data store, there might be a limit on the amount of storage space, processing power, or network bandwidth per partition. If the requirements are likely to exceed these limits, you might need to refine your partitioning strategy or split data out further, possibly combining two or more strategies.

- Monitor the system to verify that data is distributed as expected and that the partitions can handle the load. Actual usage does not always match what an analysis predicts. If so, it might be possible to rebalance the partitions, or else redesign some parts of the system to gain the required balance.

Designing partitions for query performance

Query performance can often be boosted by using smaller data sets and by running parallel queries. Each partition should contain a small proportion of the entire data set. This reduction in volume can improve the performance of queries.

Follow these steps when designing partitions for query performance:

- Examine the application requirements and performance:

- Use business requirements to determine the critical queries that must always perform quickly.

- Monitor the system to identify any queries that perform slowly.

- Find which queries are performed most frequently. Even if a single query has a minimal cost, the cumulative resource consumption could be significant.

- Partition the data that is causing slow performance:

- Limit the size of each partition so that the query response time is within target.

- If you use horizontal partitioning, design the shard key so that the application can easily select the right partition. This prevents the query from having to scan through every partition.

- Consider the location of a partition. If possible, try to keep data in partitions that are geographically close to the applications and users that access it.

- If an entity has throughput and query performance requirements, use functional partitioning based on that entity. If this still doesn’t satisfy the requirements, apply horizontal partitioning as well. In most cases, a single partitioning strategy will suffice, but in some cases it is more efficient to combine both strategies.

- Consider running queries in parallel across partitions to improve performance.

Designing partitions for availability

Partitioning data can improve the availability of applications by ensuring that the entire dataset does not constitute a single point of failure and that individual subsets of the dataset can be managed independently.

Consider the following factors that affect availability:

- How critical the data is to business operations. Identify which data is critical business information, such as transactions, and which data is less critical operational data, such as log files.

- Consider storing critical data in highly available partitions with an appropriate backup plan.

- Establish separate management and monitoring procedures for the different datasets.

- Place data that has the same level of criticality in the same partition so that it can be backed up together at an appropriate frequency. For example, partitions that hold transaction data might need to be backed up more frequently than partitions that hold logging or trace information.

- How individual partitions can be managed. Designing partitions to support independent management and maintenance provides several advantages. For example:

- If a partition fails, it can be recovered independently without applications that access data in other partitions.

- Partitioning data by geographical area allows scheduled maintenance tasks to occur at off-peak hours for each location. Ensure that partitions are not too large to prevent any planned maintenance from being completed during this period.

Whether to replicate critical data across partitions. This strategy can improve availability and performance, but can also introduce consistency issues. It takes time to synchronize changes with every replica. During this period, different partitions will contain different data values.

Application design considerations

Consider the following points when you design a data partitioning scheme:

-

Minimize cross-partition data access operations. Where possible, keep data for the most common database operations together in each partition to minimize cross-partition data access operations. Querying across partitions can be more time-consuming than querying within a single partition, but optimizing partitions for one set of queries might adversely affect other sets of queries. If you must query across partitions, minimize query time by running parallel queries and aggregating the results within the application. (This approach might not be possible in some cases, such as when the result from one query is used in the next query.)

-

Consider replicating static reference data. If queries use relatively static reference data, such as postal code tables or product lists, consider replicating this data in all of the partitions to reduce separate lookup operations in different partitions. This approach can also reduce the likelihood of the reference data becoming a “hot” dataset, with heavy traffic from across the entire system. However, there is an additional cost associated with synchronizing any changes to the reference data.

-

Minimize cross-partition joins. Where possible, minimize requirements for referential integrity across vertical and functional partitions. In these schemes, the application is responsible for maintaining referential integrity across partitions. Queries that join data across multiple partitions are inefficient because the application typically needs to perform consecutive queries based on a key and then a foreign key. Instead, consider replicating or de-normalizing the relevant data. If cross-partition joins are necessary, run parallel queries over the partitions and join the data within the application.

-

Embrace eventual consistency. Evaluate whether strong consistency is actually a requirement. A common approach in distributed systems is to implement eventual consistency. The data in each partition is updated separately, and the application logic ensures that the updates are all completed successfully. It also handles the inconsistencies that can arise from querying data while an eventually consistent operation is running.

-

Consider how queries locate the correct partition. If a query must scan all partitions to locate the required data, there is a significant impact on performance, even when multiple parallel queries are running. With vertical and functional partitioning, queries can naturally specify the partition. Horizontal partitioning, on the other hand, can make locating an item difficult, because every shard has the same schema. A typical solution to maintain a map that is used to look up the shard location for specific items. This map can be implemented in the sharding logic of the application, or maintained by the data store if it supports transparent sharding.

-

Consider periodically rebalancing shards. With horizontal partitioning, rebalancing shards can help distribute the data evenly by size and by workload to minimize hotspots, maximize query performance, and work around physical storage limitations. However, this is a complex task that often requires the use of a custom tool or process.

-

Replicate partitions. If you replicate each partition, it provides additional protection against failure. If a single replica fails, queries can be directed toward a working copy.

-

Avoid transactions that access data in multiple partitions. Some data stores implement transactional consistency and integrity for operations that modify data, but only when the data is located in a single partition. If you need transactional support across multiple partitions, you will probably need to implement this as part of your application logic because most partitioning systems do not provide native support.

Rebalancing partitions

As a system matures, you might have to adjust the partitioning scheme. For example, individual partitions might start getting a disproportionate volume of traffic and become hot, leading to excessive contention. Or you might have underestimated the volume of data in some partitions, causing some partitions to approach capacity limits.

Rebalancing is an administrative task that consists of two stages:

- Determine a new partitioning strategy.

- Which partitions need to be split (or possibly combined)?

- What is the new partition key?

- Migrate data from the old partitioning scheme to the new set of partitions.

Depending on the data store, you might be able to migrate data between partitions while they are in use. This is called online migration. If that’s not possible, you might need to make partitions unavailable while the data is relocated (offline migration).

Offline migration

Offline migration is typically simpler because it reduces the chances of contention occurring. Conceptually, offline migration works as follows:

- Mark the partition offline.

- Split-merge and move the data to the new partitions.

- Verify the data.

- Bring the new partitions online.

- Remove the old partition.

Optionally, you can mark a partition as read-only in step 1, so that applications can still read the data while it is being moved.

Online migration

Online migration is more complex to perform but less disruptive. The process is similar to offline migration, except the original partition is not marked offline. Depending on the granularity of the migration process (for example, item by item versus shard by shard), the data access code in the client applications might have to handle reading and writing data that’s held in two locations, the original partition and the new partition.

Data partitioning strategies

Partitioning Database

A single SQL database has a limit to the volume of data that it can contain. Throughput is constrained by architectural factors and the number of concurrent connections that it supports.

Each shard is implemented as a SQL database. A shard can hold more than one dataset.

To reduce latency and improve availability, you can replicate the global shard map manager database. you can configure active geo-replication to continuously copy data to databases in different regions.

A separate SQL database acts as a global shard map manager. The application connects to the shard map manager database to obtain a copy of the shard map. It caches the shard map locally, and uses the map to route data requests to the appropriate shard.

- A list shard map associates a single key to a shardlet. For example, in a multitenant system, the data for each tenant can be associated with a unique key and stored in its own shardlet. To guarantee isolation, each shardlet can be held within its own shard.

-

A range shard map associates a set of contiguous key values to a shardlet. For example, you can group the data for a set of tenants (each with their own key) within the same shardlet. This scheme is less expensive than the first, because tenants share data storage, but has less isolation.

The partitioning scheme can significantly affect the performance of your system. It can also affect the rate at which shards have to be added or removed, or that data must be repartitioned across shards. Consider the following points:

- Group data that is used together in the same shard, and avoid operations that access data from multiple shards. A shard is a SQL database in its own right, and cross-database joins must be performed on the client side.

- Don’t design a system that has dependencies between shards. Referential integrity constraints, triggers, and stored procedures in one database cannot reference objects in another.

- If you have reference data that is frequently used by queries, consider replicating this data across shards. This approach can remove the need to join data across databases. Ideally, such data should be static or slow-moving, to minimize the replication effort and reduce the chances of it becoming stale.

- Transactional operations are only supported for data within a shard, and not across shards. Transactions can span shardlets as long as they are part of the same shard. Therefore, if your business logic needs to perform transactions, either store the data in the same shard or implement eventual consistency.

Partitioning Table

Each entity stored in a table must provide a two-part key that includes:

- The partition key. This is a string value that determines the partition where Table storage will place the entity. All entities with the same partition key are stored in the same partition.

- The row key. This is a string value that identifies the entity within the partition. All entities within a partition are sorted lexically, in ascending order, by this key. The partition key/row key combination must be unique for each entity and cannot exceed 1 KB in length.

Assume a storage account contains three tables: Customer Info, Product Info, and Order Info. Each table has multiple partitions.

- In the Customer Info table, the data is partitioned according to the city where the customer is located. The row key contains the customer ID.

- In the Product Info table, products are partitioned by product category, and the row key contains the product number.

- In the Order Info table, the orders are partitioned by order date, and the row key specifies the time the order was received. All data is ordered by the row key in each partition.

Consider the following points when you design your entities for Table storage:

- Select a partition key and row key by how the data is accessed. Choose a partition key/row key combination that supports the majority of your queries. The most efficient queries retrieve data by specifying the partition key and the row key. Queries that specify a partition key and a range of row keys can be completed by scanning a single partition. This is relatively fast because the data is held in row key order. If queries don’t specify which partition to scan, every partition must be scanned.

- If an entity has one natural key, then use it as the partition key and specify an empty string as the row key. If an entity has a composite key consisting of two properties, select the slowest changing property as the partition key and the other as the row key. If an entity has more than two key properties, use a concatenation of properties to provide the partition and row keys.

MySQL

|

|

-i--interactive: Keep STDIN open even if not attached-t--tty: Allocate a pseudo-TTY 分配伪 TTY-d--detach: Run container in background and print container ID-p--publish: Publish a container’s port(s) to the host-e--env: Set environment variables

RANGE partitioning

|

|

|

|

|

|

Any valid query against a partitioned table can be rewritten with a PARTITION option to restrict the result to one or more desired partitions. You can use WHERE conditions, ORDER BY and LIMIT options, and so on. You can also use aggregate functions with HAVING and GROUP BY options.

|

|

Partition selection can also be used with joins.

|

|

Partition selection can also be used with DELETE statements and UPDATE statements.

|

|

LIST partitioning

|

|

HASH partitioning

Partitioning by HASH is used primarily to ensure an even distribution of data among a predetermined number of partitions.

The following statement creates a table that uses hashing on the store_id column and is divided into 4 partitions:

|

|

KEY partitioning

KEY takes only a list of zero or more column names. Any columns used as the partitioning key must comprise part or all of the table’s primary key, if the table has one. Where no column name is specified as the partitioning key, the table’s primary key is used, if there is one.

If there is no primary key but there is a unique key, then the unique key is used for the partitioning key.

|

|

|

|

PostgreSQL

The table that is divided is referred to as a partitioned table.

The partitioned table itself is a “virtual” table having no storage of its own. Instead, the storage belongs to partitions, which are otherwise-ordinary tables associated with the partitioned table. Each partition stores a subset of the data as defined by its partition bounds. All rows inserted into a partitioned table will be routed to the appropriate one of the partitions based on the values of the partition key column(s). Updating the partition key of a row will cause it to be moved into a different partition if it no longer satisfies the partition bounds of its original partition.

RANGE partitioning

|

|

Create Partitions

|

|

Create an index on the key column(s), as well as any other indexes you might want, on the partitioned table. (The key index is not strictly necessary, but in most scenarios it is helpful.) This automatically creates a matching index on each partition, and any partitions you create or attach later will also have such an index. An index or unique constraint declared on a partitioned table is “virtual” in the same way that the partitioned table is: the actual data is in child indexes on the individual partition tables.

|

|

In the above example we would be creating a new partition each month, so it might be wise to write a script that generates the required DDL automatically.

Set Up Constraints

We need to define constraints on each partition to ensure that data is correctly routed to the appropriate partition. In this example, we will use CHECK constraints on the sale_date column for each partition:

|

|

Insert Data into Partitions

Now, we can insert data into the sales table, and PostgreSQL will automatically route the data to the appropriate partition based on the sale_date:

|

|

Querying Data from Partitions

When querying data, PostgreSQL will automatically access only the relevant partitions based on the WHERE clause.

|

|

These queries will only access the appropriate partitions, resulting in improved query performance.

LIST partitioning

|

|

Create Partitions

|

|

Set Up Constraints

Since list partitioning is based on specific values, we don’t need CHECK constraints.

Insert Data into Partitions

Now, we can insert data into the products table, and PostgreSQL will automatically route the data to the appropriate partition based on the category.

|

|

Querying Data from Partitions

When querying data, PostgreSQL will automatically access only the relevant partition based on the WHERE clause.

|

|

HASH partitioning

|

|

Create Partitions

|

|

We use MODULUS and REMAINDER to specify the number of partitions (3 in this case) and the remainder value for each partition.

Insert Data into Partitions

Now, we can insert data into the orders table, and PostgreSQL will automatically route the data to the appropriate partition based on the hash value of the customer_id:

|

|

Querying Data from Partitions

When querying data, PostgreSQL will automatically access the appropriate partition based on the hash value of the customer_id.

|

|

Partitioning Blob

Partitioning storage queues

Partitioning Service Bus

Partitioning NoSQL

Partitioning Search

Partitioning Cache for Redis

Partitioning Event Hubs

Solutions

Traditional relational databases with Sharding Middleware

Sharding and partitioning are techniques to divide and scale large databases. Sharding distributes data across multiple servers, while partitioning splits tables within one server.

Table partitioning is a built-in, native feature in MySQL and PostgreSQL. Sharding is available via distributed database middleware.

Regarding performance and availability, traditional solutions that store data on a single data node in a centralized manner can no longer adapt to the massive data scenarios created by the Internet. Most relational database products use B+ tree indexes. When the data volume exceeds the threshold, the increase in the index depth leads to an increased disk I/O count, the substantially degrading query performance. In addition, highly concurrent access requests also turn the centralized database into the biggest bottleneck of the system.

Database sharding and read/write separation are the two common measures for heavy access traffic. Although table sharding can resolve the performance problems caused by massive data, it cannot resolve the problem of slow responsiveness caused by excessive requests to the same database. For this reason, database sharding is often implemented in horizontal sharding to handle the huge data volume and heavy access traffic. Read/write separation is another way to distribute traffic. However, you must consider the latency between data reading and data writing when designing the architecture.

ShardingSphere

Vitess

NoSQL

Since traditional relational databases cannot meet the requirements of the Internet, increasing numbers of attempts have been made to store data in NoSQL databases that natively support data distribution. However, NoSQL is not compatible with SQL Server and its ecosystem is yet to be improved. Therefore, NoSQL cannot replace relational databases, and the position of the relational databases is secure.

NewSQL

TiDB

VoltDB

Reference

数据库中间件ShardingSphere-ShardingProxy使用

Guide to PostgreSQL Table Partitioning

Mastering PostgreSQL Table Partitioning