What is an API gateway?

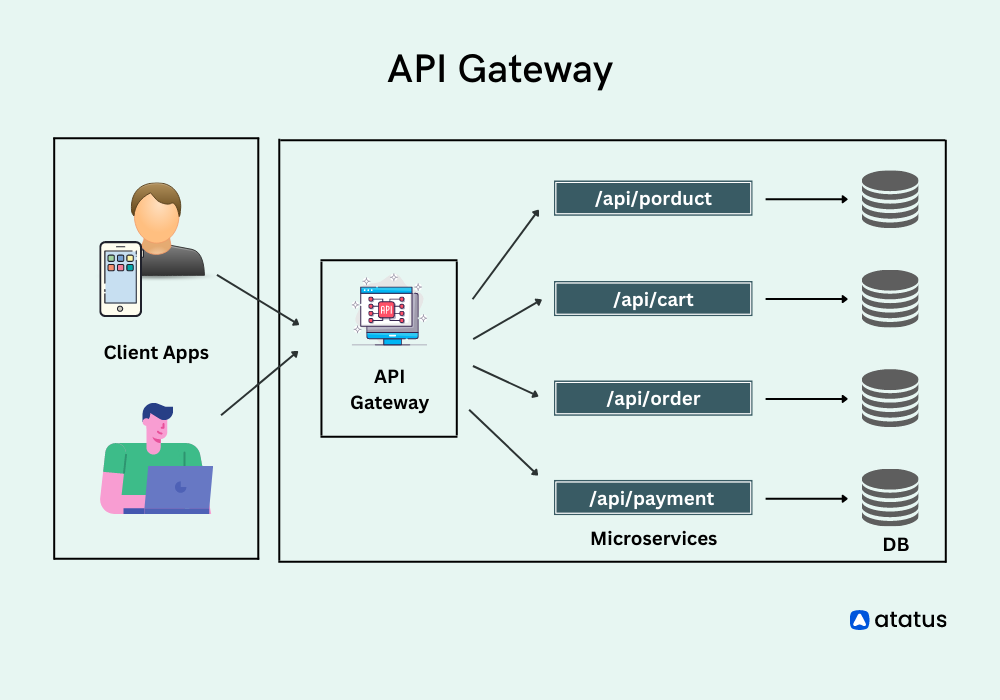

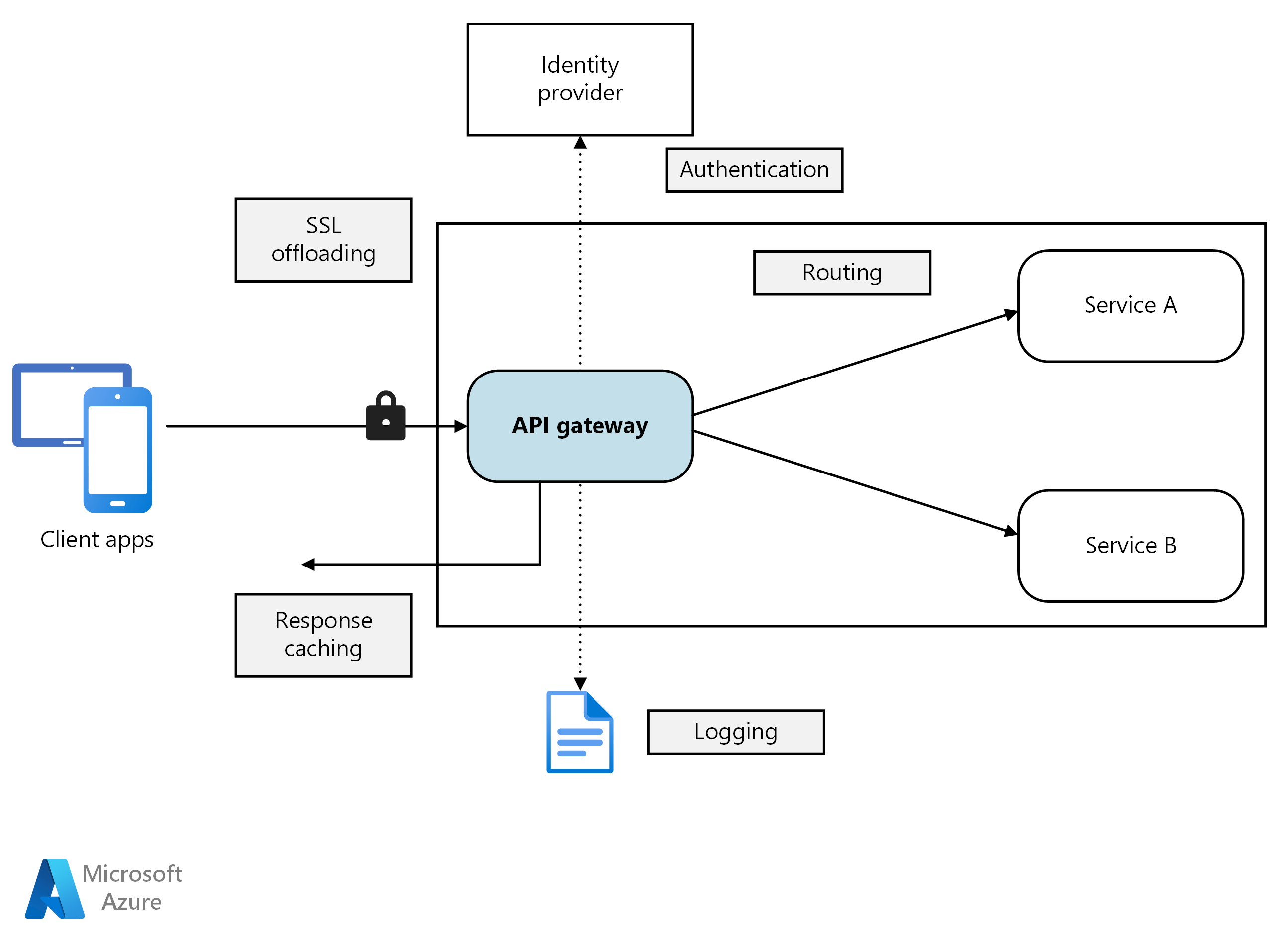

An API gateway sits between clients and services. It acts as a reverse proxy, routing requests from clients to services. It may also perform various cross-cutting tasks such as authentication, SSL termination, and rate limiting.

If you don’t deploy a gateway, clients must send requests directly to front-end services. However, there are some potential problems with exposing services directly to clients:

- It can result in complex client code. The client must keep track of multiple endpoints, and handle failures in a resilient way.

- It creates coupling between the client and the backend. The client needs to know how the individual services are decomposed. That makes it harder to maintain the client and also harder to refactor services.

- A single operation might require calls to multiple services. That can result in multiple network round trips between the client and the server, adding significant latency.

- Each public-facing service must handle concerns such as authentication, SSL, and client rate limiting.

A gateway helps to address these issues by decoupling clients from services. Gateways can perform a number of different functions, and you may not need all of them. The functions can be grouped into the following design patterns:

-

Gateway Routing

Use the gateway as a reverse proxy to route requests to one or more backend services, using layer 7 routing. The gateway provides a single endpoint for clients, and helps to decouple clients from services.

-

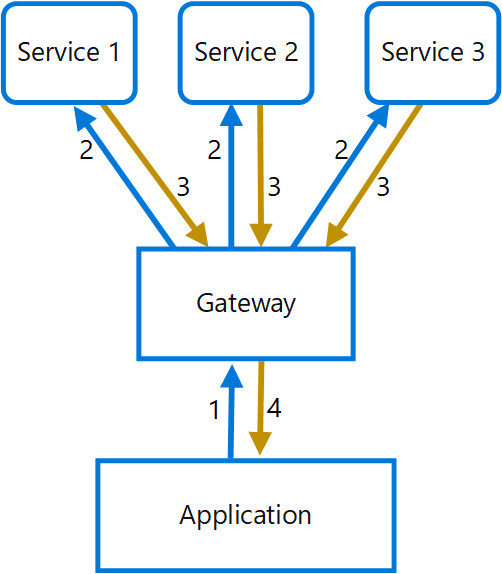

Gateway Aggregation

Use the gateway to aggregate multiple individual requests into a single request. This pattern applies when a single operation requires calls to multiple backend services. The client sends one request to the gateway. The gateway dispatches requests to the various backend services, and then aggregates the results and sends them back to the client. This helps to reduce chattiness between the client and the backend.

-

Gateway Offloading

Use the gateway to offload functionality from individual services to the gateway, particularly cross-cutting concerns. It can be useful to consolidate these functions into one place, rather than making every service responsible for implementing them.

Implementing cross-cutting concerns such as security and authorization on every microservice can require significant development effort. A possible approach is to keep those services inside the Docker host or internal cluster, so that they cannot be accessed directly from the outside, and to implement the cross-cutting concerns in a centralized place, such as an API Gateway.

Here are some examples of functionality that could be offloaded to a gateway:

- SSL termination

- Authentication

- IP allowlist or blocklist

- Client rate limiting (throttling)

- Logging and monitoring

- Response caching

- Web application firewall

- GZIP compression

- Serving static content
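To make one item on this list concrete, client rate limiting is often implemented with a token bucket. The following is a minimal single-process sketch, not the behavior of any particular gateway product; the `TokenBucket` and `ClientRateLimiter` classes and their parameters are hypothetical names chosen for this example.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to the elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ClientRateLimiter:
    """Keeps one bucket per client key, for example an API key or IP address."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets = {}

    def allow(self, client_id):
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self.buckets[client_id] = bucket
        return bucket.allow()
```

A gateway would call `allow(client_id)` before forwarding a request and answer with HTTP 429 when it returns `False`. In a deployment with several gateway replicas the buckets would have to live in shared storage, which this sketch leaves out.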

Choosing a gateway technology

Here are some options for implementing an API gateway in your application.

- Reverse proxy server. Nginx and HAProxy are popular reverse proxy servers that support features such as load balancing, SSL, and layer 7 routing.

- Service mesh ingress controller. If you are using a service mesh such as Linkerd or Istio, consider the features that are provided by the ingress controller for that service mesh. For example, the Istio ingress controller supports layer 7 routing, HTTP redirects, retries, and other features.

Depending on the features that you need, you might deploy more than one gateway.

The gateway is a potential bottleneck or single point of failure in the system, so always deploy at least two replicas for high availability. You may need to scale out the replicas further, depending on the load.

Deploying Nginx or HAProxy to Kubernetes

You can deploy Nginx or HAProxy to Kubernetes as a ReplicaSet or DaemonSet that specifies the Nginx or HAProxy container image. Use a ConfigMap to store the configuration file for the proxy, and mount the ConfigMap as a volume. Create a service of type LoadBalancer to expose the gateway through an Azure Load Balancer.
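The three resources described above can be sketched as follows. Kubernetes accepts manifests in JSON as well as YAML, so this example builds them as Python dictionaries and prints a JSON `List` that could be passed to `kubectl apply -f -`. The resource names, the `nginx:stable` image tag, the `orders-service` backend, and the single-route configuration file are placeholders assumed for illustration.

```python
import json

LABELS = {"app": "api-gateway"}

# Hypothetical proxy configuration with a single route.
NGINX_CONF = """\
server {
    listen 80;
    location /orders/ {
        proxy_pass http://orders-service;
    }
}
"""

# ConfigMap that stores the proxy configuration file.
config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "gateway-config"},
    "data": {"default.conf": NGINX_CONF},
}

# ReplicaSet that runs two proxy pods and mounts the ConfigMap as a volume.
replica_set = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {"name": "api-gateway"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": LABELS},
        "template": {
            "metadata": {"labels": LABELS},
            "spec": {
                "containers": [{
                    "name": "nginx",
                    "image": "nginx:stable",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [
                        {"name": "config", "mountPath": "/etc/nginx/conf.d"}
                    ],
                }],
                "volumes": [
                    {"name": "config", "configMap": {"name": "gateway-config"}}
                ],
            },
        },
    },
}

# Service of type LoadBalancer that exposes the gateway pods.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "api-gateway"},
    "spec": {
        "type": "LoadBalancer",
        "selector": LABELS,
        "ports": [{"port": 80, "targetPort": 80}],
    },
}

manifest = {"apiVersion": "v1", "kind": "List", "items": [config_map, replica_set, service]}

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))
```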

Deploying Ingress Controller to Kubernetes

An alternative is to deploy an Ingress Controller. An Ingress Controller is a component that runs in the Kubernetes cluster and operates a load balancer or reverse proxy server. Several implementations exist, including Nginx and HAProxy. A separate resource called an Ingress defines settings for the Ingress Controller, such as routing rules and TLS certificates. That way, you don’t need to manage complex configuration files that are specific to a particular proxy server technology.
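As a hedged sketch of what such an Ingress might contain, the example below builds one with a TLS certificate and two path-based routing rules as a Python dictionary and prints it as JSON. The host name, secret name, service names, ports, and the `nginx` ingress class are all assumptions made for illustration.

```python
import json


def path_rule(prefix, service, port):
    """Build one routing rule that sends a path prefix to a backend Service."""
    return {
        "path": prefix,
        "pathType": "Prefix",
        "backend": {"service": {"name": service, "port": {"number": port}}},
    }


ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "gateway-ingress"},
    "spec": {
        # Selects which Ingress Controller handles this resource.
        "ingressClassName": "nginx",
        # TLS certificate, stored in a Kubernetes Secret.
        "tls": [{"hosts": ["api.example.com"], "secretName": "api-example-tls"}],
        # Layer 7 routing rules.
        "rules": [{
            "host": "api.example.com",
            "http": {
                "paths": [
                    path_rule("/orders", "orders-service", 80),
                    path_rule("/catalog", "catalog-service", 80),
                ]
            },
        }],
    },
}

if __name__ == "__main__":
    print(json.dumps(ingress, indent=2))
```

The same resource works with any controller that implements the Ingress API, which is the point of keeping routing rules out of proxy-specific configuration files.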

Using multiple custom API Gateways

Usually it isn’t a good idea to have a single API Gateway aggregating all the internal microservices of your application.

A single gateway is risky because it grows and evolves to meet many different requirements from the client apps. Eventually it becomes bloated by those competing needs and can end up resembling a monolithic application or service. For that reason, it is recommended to split the API Gateway into multiple services or multiple smaller API Gateways, for instance one per client app form factor.

Therefore, the API Gateways should be segregated based on business boundaries and the client apps and not act as a single aggregator for all the internal microservices.

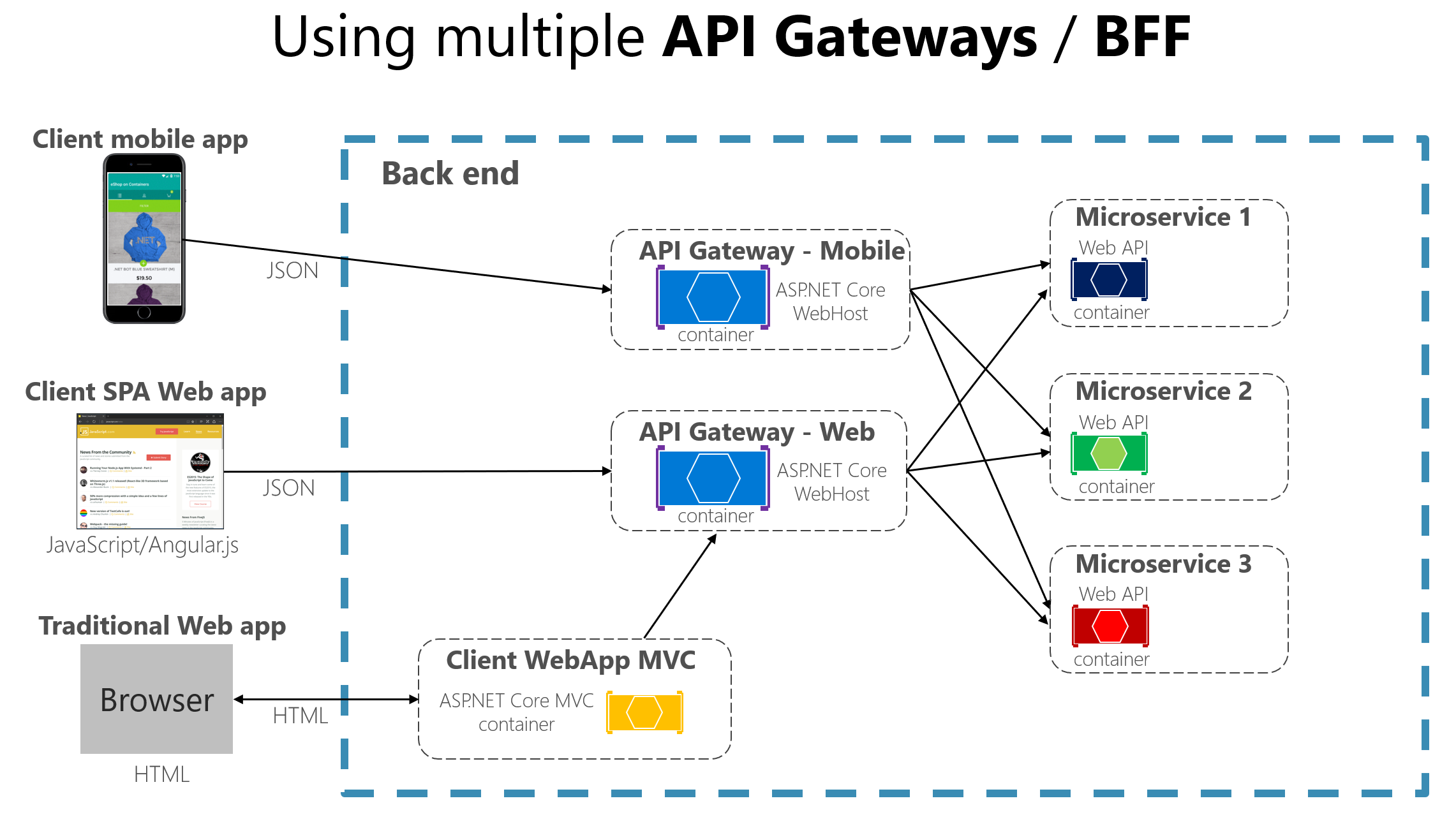

When you split the API Gateway tier into multiple API Gateways and your application has multiple client apps, the client app type can be the primary pivot for identifying the gateways, so that each client app gets a facade suited to its needs. This pattern is named “Backend for Frontend” (BFF): each API Gateway provides an API tailored to one client app type, possibly even to the client form factor, by implementing specific adapter code that calls multiple internal microservices underneath, as shown in the following image:

In this case, the boundaries identified for each API Gateway are based purely on the “Backend for Frontend” (BFF) pattern, hence based just on the API needed per client app. But in larger applications you should also go further and create other API Gateways based on business boundaries as a second design pivot.

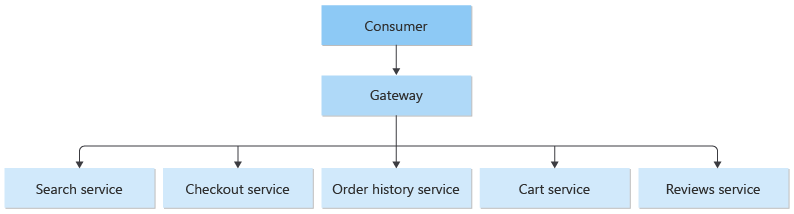
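The Backend for Frontend idea can be illustrated with a small sketch in which two gateways call the same internal services but return differently shaped responses. Everything here is hypothetical: the `Product` type, the two stand-in service functions, and their sample data exist only for this example.

```python
from dataclasses import dataclass


@dataclass
class Product:
    id: int
    name: str
    description: str
    price: float


# Stand-ins for calls to internal microservices.
def catalog_service_get(product_id):
    return Product(product_id, "Kettle", "A 1.7 litre electric kettle.", 29.99)


def review_service_list(product_id):
    return [{"rating": 5, "text": "Boils fast."}, {"rating": 4, "text": "Good value."}]


def mobile_gateway_product(product_id):
    """Mobile BFF: compact payload for small screens and slow networks."""
    product = catalog_service_get(product_id)
    reviews = review_service_list(product_id)
    return {
        "id": product.id,
        "name": product.name,
        "price": product.price,
        "avg_rating": sum(r["rating"] for r in reviews) / len(reviews),
    }


def web_gateway_product(product_id):
    """Web BFF: full detail, including the description and every review."""
    product = catalog_service_get(product_id)
    reviews = review_service_list(product_id)
    return {
        "id": product.id,
        "name": product.name,
        "description": product.description,
        "price": product.price,
        "reviews": reviews,
    }
```

Each facade can change with its own client app without forcing a change on the other, while the internal services stay untouched.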

Gateway routing pattern

Multiple disparate services

The gateway routing pattern is useful when a client consumes multiple services. If a service is consolidated, decomposed, or replaced, the client doesn’t necessarily require updating. It can continue making requests to the gateway, and only the routing changes.
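A minimal sketch of this idea is a routing table that maps path prefixes to backends using longest-prefix matching. The prefixes and internal host names below are invented for illustration, and stripping the matched prefix before forwarding is a design choice of this example rather than a requirement of the pattern.

```python
from typing import Optional

# Hypothetical routing table: public path prefix -> internal backend base URL.
ROUTES = {
    "/orders": "http://orders.internal:8080",
    "/catalog": "http://catalog.internal:8080",
    "/catalog/search": "http://search.internal:8080",
}


def resolve(path: str, routes: dict = ROUTES) -> Optional[str]:
    """Return the backend URL for a request path, or None if no route matches."""
    best = None
    for prefix in routes:
        # Match whole path segments only, so "/ordersx" does not match "/orders".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    if best is None:
        return None
    # Forward the remainder of the path to the selected backend.
    return routes[best] + path[len(best):]
```

If the orders service is replaced, only the table entry changes, for example `ROUTES["/orders"] = "http://orders-v2.internal:8080"`. Clients keep calling `/orders/...` on the gateway as before.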

Multiple instances of the same service

The client is unaware of increases or decreases in the number of service instances. Service instances can be deployed in a single region or in multiple regions. The Geode pattern details how a multi-region, active-active deployment can improve latency and increase availability of a service.

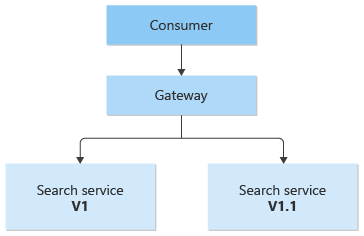
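One simple way a gateway can spread requests across instances of the same service is round-robin selection. The sketch below is illustrative only: `InstancePool` is a hypothetical name, and real gateways usually combine this with health checks and smarter balancing.

```python
import itertools


class InstancePool:
    """Round-robin selection over the current instances of one service."""

    def __init__(self, instances):
        self._instances = list(instances)
        self._counter = itertools.count()

    def add(self, url):
        """Register a new instance, for example after a scale-out."""
        self._instances.append(url)

    def remove(self, url):
        """Deregister an instance, for example after a scale-in or failure."""
        self._instances.remove(url)

    def next_instance(self):
        if not self._instances:
            raise RuntimeError("no instances available")
        index = next(self._counter) % len(self._instances)
        return self._instances[index]
```

Clients keep calling the single gateway endpoint while `add` and `remove` change the set of instances behind it.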

Multiple versions of the same service

This pattern can be used for deployments, by allowing you to manage how updates are rolled out to users. When a new version of your service is deployed, it can be deployed in parallel with the existing version. Routing lets you control what version of the service is presented to the clients, giving you the flexibility to use various release strategies, whether incremental, parallel, or complete rollouts of updates. Any issues discovered after the new service is deployed can be quickly reverted by making a configuration change at the gateway, without affecting clients.
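An incremental rollout of this kind can be sketched as weighted selection between versions, where the weights are gateway configuration. The `VersionRouter` class and the version labels are hypothetical names for this example; production gateways expose equivalent settings in their own configuration formats.

```python
import random


class VersionRouter:
    """Chooses a service version for each request according to configured weights."""

    def __init__(self, weights, rng=None):
        self.weights = dict(weights)
        self.rng = rng or random.Random()

    def set_weights(self, weights):
        """Apply a configuration change, such as a rollout step or a rollback."""
        self.weights = dict(weights)

    def pick(self):
        versions = list(self.weights)
        shares = [self.weights[v] for v in versions]
        return self.rng.choices(versions, weights=shares, k=1)[0]


router = VersionRouter({"v1": 0.9, "v2": 0.1})  # send roughly 10% of traffic to v2
router.set_weights({"v1": 0.0, "v2": 1.0})      # complete the rollout
router.set_weights({"v1": 1.0, "v2": 0.0})      # roll back without touching clients
```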

Gateway aggregation pattern

Use a gateway to reduce chattiness between the client and the services. The gateway receives client requests, dispatches requests to the various backend systems, and then aggregates the results and sends them back to the requesting client.

This pattern can reduce the number of requests that the application makes to backend services, and improve application performance over high-latency networks.
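The fan-out and aggregation step might look like the following sketch, which uses `asyncio.gather` to call three backends concurrently and merge their results into one response. The three `fetch_*` functions are stand-ins that simulate network calls with a short sleep; their names and return values are assumptions for illustration.

```python
import asyncio


# Stand-ins for network calls to three backend services.
async def fetch_profile(user_id):
    await asyncio.sleep(0.01)
    return {"id": user_id, "name": "Sample User"}


async def fetch_orders(user_id):
    await asyncio.sleep(0.01)
    return [{"order_id": 1001, "total": 42.0}]


async def fetch_recommendations(user_id):
    await asyncio.sleep(0.01)
    return ["kettle", "toaster"]


async def get_dashboard(user_id):
    """Gateway endpoint: one client request fans out to three backend calls."""
    profile, orders, recommendations = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
        fetch_recommendations(user_id),
    )
    return {"profile": profile, "orders": orders, "recommendations": recommendations}


if __name__ == "__main__":
    print(asyncio.run(get_dashboard(7)))
```

The client makes a single round trip, and because the backend calls run concurrently, the gateway's own latency is close to that of the slowest call rather than the sum of all three. A fuller version would also decide what to return when one backend fails or times out.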

Gateway offloading pattern

Some features are commonly used across multiple services, and these features require configuration, management, and maintenance. A shared or specialized service that is distributed with every application deployment increases the administrative overhead and increases the likelihood of deployment error.

Properly handling security issues (token validation, encryption, SSL certificate management) and other complex tasks can require team members to have highly specialized skills.

Offload some features into a gateway, particularly cross-cutting concerns such as certificate management, authentication, SSL termination, monitoring, protocol translation, or throttling.

One example is switching from an existing application’s own auth implementation to the API gateway’s auth system.
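As a hedged sketch of offloading authentication, the gateway below validates a signed token and forwards only the resulting identity, so the backend service contains no token logic. The token format (`user_id.signature` with HMAC-SHA256), the hard-coded secret, and all function names are assumptions for this example; a real system would typically use a standard token format and a secret store.

```python
import hashlib
import hmac

SECRET = b"demo-secret"  # assumption: in practice this comes from a secret store


def _signature(user_id):
    return hmac.new(SECRET, user_id.encode(), hashlib.sha256).hexdigest()


def sign(user_id):
    """Issue a token of the form '<user_id>.<hex signature>'."""
    return f"{user_id}.{_signature(user_id)}"


def authenticate(token):
    """Return the user id if the token signature is valid, otherwise None."""
    user_id, _, mac = token.rpartition(".")
    if user_id and hmac.compare_digest(mac.encode(), _signature(user_id).encode()):
        return user_id
    return None


def orders_service(user_id):
    """Backend service: trusts the identity supplied by the gateway."""
    return {"user": user_id, "orders": []}


def gateway_handle(headers):
    """Gateway: validates the bearer token, then forwards to the backend."""
    token = headers.get("Authorization", "")
    if token.startswith("Bearer "):
        token = token[len("Bearer "):]
    user_id = authenticate(token)
    if user_id is None:
        return 401, {"error": "unauthorized"}
    return 200, orders_service(user_id)
```

This only works if the backend cannot be reached except through the gateway, which is why the services are kept inside the internal network.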

Drawbacks of the API Gateway pattern

- Using a microservices API Gateway creates an additional possible single point of failure.

- If not scaled out properly, the API Gateway can become a bottleneck.

- An API Gateway requires additional development cost and future maintenance if it includes custom logic and data aggregation. Developers must update the API Gateway in order to expose each microservice’s endpoints. Moreover, implementation changes in the internal microservices might cause code changes at the API Gateway level. However, if the API Gateway is just applying security, logging, and versioning, this additional development cost might not apply.
